EzyFox Server Scale Up

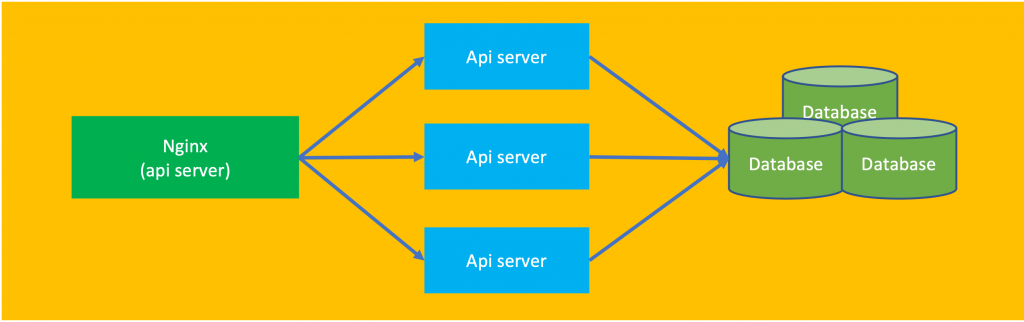

Updated at: 2023-11-12 (UTC)

Normally, we work with HTTP, and HTTP is not too difficult to scale because it is a stateless protocol: the client and the server do not need to keep a connection open after a request is completed. So we can often simply increase the number of servers, following the model shown above, which partly solves the problem.

But with sockets it is different. A socket connection is stateful: the server and the client keep a connection open. The problem is that a network card (one IP address) has at most 65,535 ports, which causes many problems, but they are still solvable.
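To make the stateless/stateful difference concrete, here is a minimal Python sketch. It is not EzyFox or nginx code; the class names and the round-robin policy are assumptions chosen for illustration. A stateless balancer can send every request to any server, while a stateful one must remember which server holds each client's open connection until that client disconnects.

```python
from itertools import cycle


class StatelessBalancer:
    """HTTP-style: each request is independent, so any server can answer it."""

    def __init__(self, servers):
        self._next = cycle(servers)

    def route_request(self):
        # Nothing is remembered between requests; just take the next server.
        return next(self._next)


class StatefulBalancer:
    """Socket-style: a client stays bound to one server until it disconnects."""

    def __init__(self, servers):
        self._next = cycle(servers)
        self._bound = {}  # client_id -> server holding its open connection

    def connect(self, client_id):
        server = next(self._next)
        self._bound[client_id] = server
        return server

    def route_message(self, client_id):
        # Later messages must go over the same open connection.
        return self._bound[client_id]

    def disconnect(self, client_id):
        self._bound.pop(client_id, None)

    def open_connections(self):
        return len(self._bound)


if __name__ == "__main__":
    http = StatelessBalancer(["s1", "s2"])
    print([http.route_request() for _ in range(4)])  # ['s1', 's2', 's1', 's2']

    sock = StatefulBalancer(["s1", "s2"])
    sock.connect("alice")
    sock.connect("bob")
    print(sock.route_message("alice"), sock.open_connections())  # s1 2
```

With the stateless version, extra servers simply join the rotation; with the stateful version, every open connection keeps holding resources on its server for as long as the client stays connected.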

1. Traditional solution

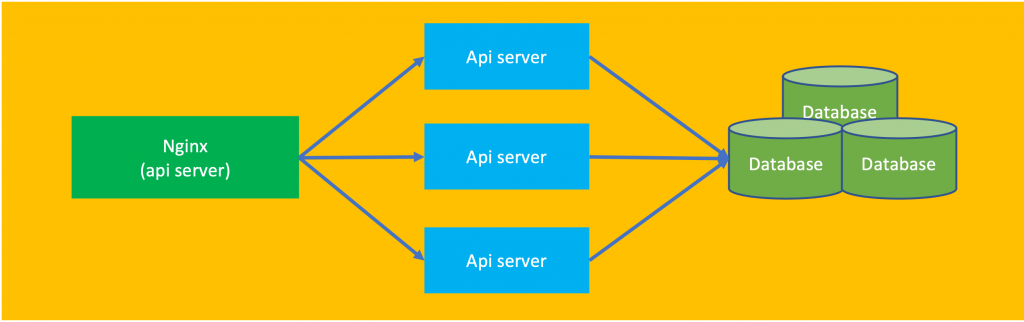

With the traditional model, we have:

- Load balancer (LB): balances the load and ensures high availability (HA)

- EzyFox Servers: socket servers to handle user requests

Problem

We may have a lot of clients connecting to the load balancer (nginx), maybe hundreds of thousands or even millions, and a socket server like EzyFox Server can itself handle hundreds of thousands of CCUs (concurrent users) with a suitable configuration. But one network card (one IP address) has only about 65,000 ports, so it can only serve about 65,000 CCUs: no matter how powerful the socket servers are, the system can only serve about 65,000 users. Why? Because when a client connects to the LB, the connection must be kept open, so the LB must open one port of its own to connect to the socket server, and each of those ports represents one client.
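The port limit can be modelled with a small, hypothetical Python sketch (not nginx internals): each client proxied by the LB holds one local port on one LB IP until it disconnects, so the size of the port range caps the CCU count. The port range is a parameter here; on a real Linux host the usable range for outgoing connections is configured separately (for example via `net.ipv4.ip_local_port_range`) and is usually smaller than 65,535.

```python
class PortExhausted(Exception):
    """Raised when an LB IP has no free local port for a new upstream connection."""


class ProxyPortPool:
    """Hypothetical model of one LB IP proxying client sockets to a socket server.

    Each proxied client keeps one local port busy for as long as it stays
    connected, so the size of the port range caps the number of CCUs.
    """

    def __init__(self, ip, first_port, last_port):
        self.ip = ip
        # Descending order, so pop() initially hands out the lowest port.
        self._free = list(range(last_port, first_port - 1, -1))
        self._in_use = {}  # client_id -> local port

    def open_upstream(self, client_id):
        if not self._free:
            raise PortExhausted(f"{self.ip}: no free local ports left")
        port = self._free.pop()
        self._in_use[client_id] = port
        return port

    def close_upstream(self, client_id):
        # The port only becomes reusable once the client disconnects.
        self._free.append(self._in_use.pop(client_id))

    @property
    def capacity(self):
        return len(self._free) + len(self._in_use)

    @property
    def ccu(self):
        return len(self._in_use)


if __name__ == "__main__":
    # Tiny 3-port range for the demo; the article's figure is about 65,000 per IP.
    pool = ProxyPortPool("10.0.0.1", first_port=50000, last_port=50002)
    for user in ["u1", "u2", "u3"]:
        pool.open_upstream(user)
    try:
        pool.open_upstream("u4")
    except PortExhausted as err:
        print(err)  # 10.0.0.1: no free local ports left
    pool.close_upstream("u1")
    print(pool.open_upstream("u4"))  # 50000
```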

Solution

To solve this problem, we can add more network cards, or use an elastic server solution with elastic network interfaces to provide multiple IPs for the load balancer. However, IPv4 addresses are scarce, so IP allocation is not simple; realistically we might get at most about 50 IPs, which makes this solution suitable only for systems serving fewer than about 3 million CCUs.
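As a rough check of that figure, assuming about 65,000 usable ports per LB IP (the figure used above), 50 IPs give:

```latex
\text{CCU}_{\max} \approx N_{\text{IP}} \times 65{,}000 = 50 \times 65{,}000 = 3{,}250{,}000
```

That is only slightly above 3 million, so beyond that range this approach runs out of IPs.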

Pros

Basically, this solution is simple and familiar: it is what we have been doing for many years, and we can reuse the existing infrastructure without additional supporting services.

Cons

Extra network cards and IPs are quite expensive, and the system can still only serve a limited number of CCUs. Moreover, having only one LB server makes it a bottleneck for the whole system.

2. Microservices solution

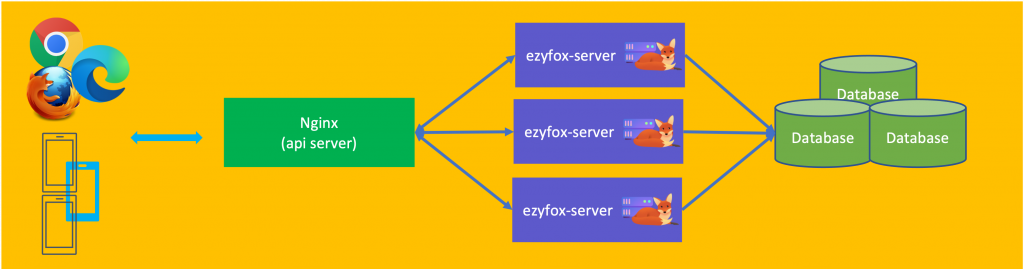

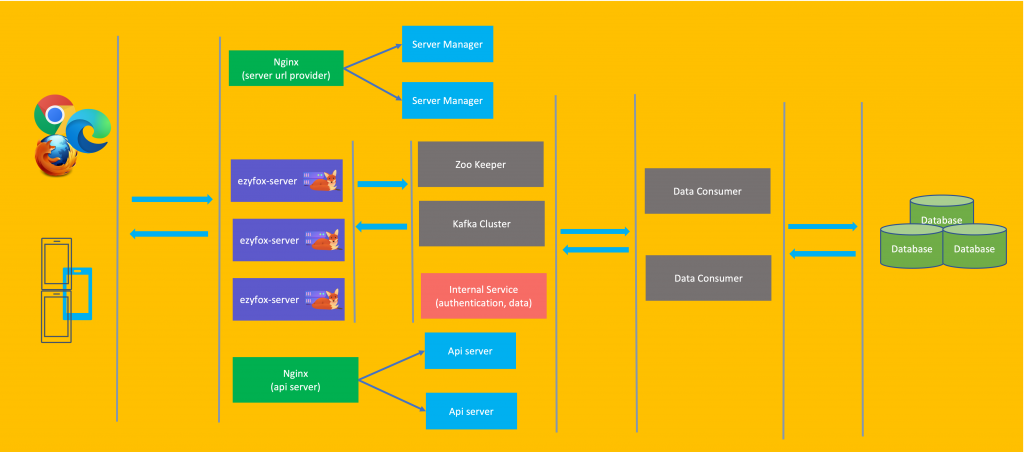

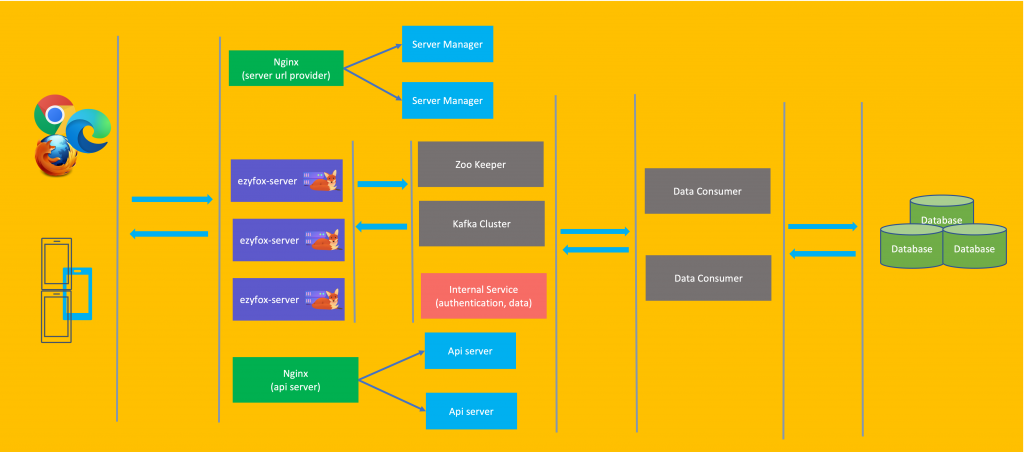

The problem with the traditional solution is that we depend on the capacity of the LB, so the idea now is to remove the LB and let clients connect directly to the socket servers, taking advantage of their full power. According to this model, we have the following components:

- Server Manager: manages the socket servers and provides their information (such as addresses) so clients know where to connect.

- EzyFox Server: socket servers that clients connect to directly.

- Kafka and ZooKeeper: the message broker system that lets the socket servers exchange data with each other and synchronize data to the database.

- Internal Service: an internal API system that the socket servers call to authenticate users or fetch data. In practice this system may not be needed; the socket servers can call the database directly.

- API Server: an API system that the client uses for heavy, data-intensive tasks. In practice this system can also be dropped, and the socket servers can call the database directly.
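The Server Manager's role can be sketched in Python as below. This is a hypothetical in-memory model, not an EzyFox API: the class and method names, the least-loaded selection policy, and the example URLs are all assumptions. The client first asks the Server Manager (for example over HTTP) for a socket server URL, then opens its socket connection directly to that server, so no LB sits in the connection path.

```python
from dataclasses import dataclass


@dataclass
class SocketServer:
    url: str
    max_ccu: int
    ccu: int = 0

    @property
    def load(self) -> float:
        return self.ccu / self.max_ccu


class ServerManager:
    """Hypothetical Server Manager: tells each client which socket server to use."""

    def __init__(self) -> None:
        self._servers = {}  # url -> SocketServer

    def register(self, url: str, max_ccu: int) -> None:
        self._servers[url] = SocketServer(url, max_ccu)

    def report_ccu(self, url: str, ccu: int) -> None:
        # Each socket server would send this periodically, like a heartbeat.
        self._servers[url].ccu = ccu

    def assign(self) -> str:
        candidates = [s for s in self._servers.values() if s.ccu < s.max_ccu]
        if not candidates:
            raise RuntimeError("all socket servers are full; add another server")
        best = min(candidates, key=lambda s: s.load)  # least-loaded server
        best.ccu += 1  # optimistic count until the next report arrives
        return best.url


if __name__ == "__main__":
    manager = ServerManager()
    manager.register("ws://socket-1.example.com/ws", max_ccu=1_000_000)
    manager.register("ws://socket-2.example.com/ws", max_ccu=1_000_000)
    manager.report_ccu("ws://socket-1.example.com/ws", 600_000)
    manager.report_ccu("ws://socket-2.example.com/ws", 200_000)
    print(manager.assign())  # ws://socket-2.example.com/ws
```

In a real deployment this state would live in a shared store and each socket server would report its CCU periodically; the optimistic `ccu += 1` only bridges the gap between reports.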

Pros

This solution reduces the number of IPs needed. For example, if each socket server can serve 1 million CCUs, then with only 3 IPs (one per server) we can serve 3 million CCUs.
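The IP savings can be checked with a little arithmetic. This Python sketch assumes about 65,000 connections per LB IP for the traditional model (the figure used earlier) and 1 million CCUs per socket server, each with its own IP, for the direct model. The direct model is not bound by the port limit in the same way, because an accepted connection is identified by the client's address and port as well as the server's, so many clients can share the server's single listening port.

```python
def ips_needed(target_ccu: int, ccu_per_ip: int) -> int:
    """Smallest number of public IPs whose combined capacity covers target_ccu."""
    return (target_ccu + ccu_per_ip - 1) // ccu_per_ip  # integer ceiling division


TARGET_CCU = 3_000_000

# Traditional model: LB ports are the limit (about 65,000 connections per LB IP).
traditional_ips = ips_needed(TARGET_CCU, 65_000)

# Direct model: one IP per socket server, assuming 1 million CCUs per server.
direct_ips = ips_needed(TARGET_CCU, 1_000_000)

if __name__ == "__main__":
    print(traditional_ips, direct_ips)  # 47 3
```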

Cons

It is relatively complicated to set up: we have to deploy an extra service that provides socket server URLs to clients, which increases operating costs for the business.

When do we need to scale up?

Not every game has thousands of concurrent players, so we don't need to hurry to think about scaling up. So is there a way to determine when to scale? The answer is to monitor the system: when you see the CCU count approaching the system's threshold, you need a scale-up solution right away. However, if you want to estimate the number of servers from the beginning, you can use the 80/20 rule of thumb: if your system has N users, then X = 20% of N are active in a month, Y = 20% of X are active in a day, and Z = 20% of Y are online at the same time (CCU), so Z = 0.8% of N. With 1.25 billion users, that gives 10 million CCUs, so you would need 10 servers (at 1 million CCUs each). This rule may not hold for every system, so verify it against your own numbers.
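The 80/20 estimate above is simple enough to write as a function. This Python sketch uses integer percentages to avoid floating-point rounding and assumes 1 million CCUs per server, the figure implied by the example; both values are parameters, not measured limits.

```python
def estimate_ccu(total_users: int, active_percent: int = 20) -> int:
    """Rough CCU estimate from the 80/20 rule of thumb (integer arithmetic)."""
    monthly_active = total_users * active_percent // 100   # X = 20% of N
    daily_active = monthly_active * active_percent // 100  # Y = 20% of X
    return daily_active * active_percent // 100            # Z = 20% of Y


def servers_needed(total_users: int, ccu_per_server: int = 1_000_000) -> int:
    ccu = estimate_ccu(total_users)
    return -(-ccu // ccu_per_server)  # ceiling division


if __name__ == "__main__":
    n = 1_250_000_000
    print(estimate_ccu(n), servers_needed(n))  # 10000000 10
```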

3. Summary

Basically, scaling a socket server is not so different from scaling an HTTP server. But HTTP is simpler: because connections are released after each request, it is hard to exhaust all of the LB's ports, so it does not require many IPs. In general, by the time we have to plan to scale up a socket server system, we are already a relatively large business with hundreds of thousands of users, so don't worry too much in the early stages of project development. Cheers!!!